Research Spotlight: Corinne Huggins-Manley

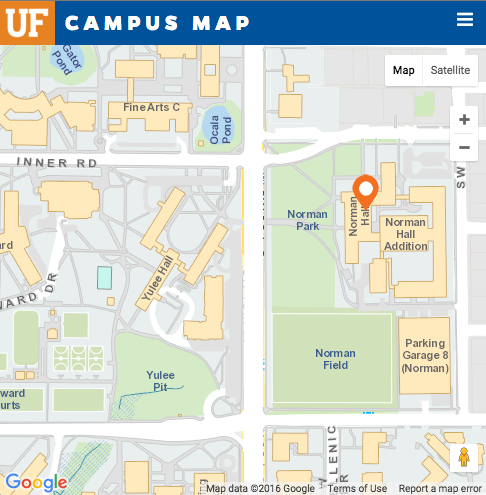

Q&A with Corinne Huggins-Manley, Ph.D., Associate Professor of Research and Evaluation Methodology, Associate Director of Human Development and Organizational Studies in Education, University of Florida Research Foundation Professor

What basic questions does your research seek to answer?

All of my methodological research seeks to overcome challenges to the practice and interpretation of quantitative measurements of latent constructs. Outside of academia, it is taken for granted that we can assign numbers to concepts such as “academic achievement” or “self-esteem,” and it is often assumed that those numbers are either accurate representations of such concepts or inaccurate only within some quantifiable margin of error.

There is little appreciation for the statistical challenges to measurement or for the process of validating inferences drawn from such measurements. Within academia, there is more awareness of the challenges of quantifying latent constructs, but researchers still have difficulty recognizing and meeting those challenges. Research in educational measurement aims to improve our ability to overcome such challenges so that valid information can be gleaned from the measurement of education-related constructs. I aim to advance the field of educational measurement with research on topics such as item response theory, fairness in reporting subgroup test scores within and across schools and teachers, scale development and score use validity, and statistical model building that can help practitioners overcome issues such as non-response bias.

What makes your work interesting?

My work is interesting because it directly tackles many of the problems that arise with measurement in educational research, policy, and practice. In educational research, the ability to use statistics to analyze data and answer research questions hinges in large part on the measurement quality of the variables being studied. However, measurement courses are often not required for doctoral students in the social sciences, and many researchers have noted that a lack of attention to measurement has become the Achilles' heel of social science research. In educational policy and practice, many measurement demands have been imposed on educators over the past few decades, often by people who are not trained in measurement or in the validity of test score interpretations. The ramifications of such policies have been widely felt in the educational community, and I believe many of them stem from a lack of understanding of what measurement is and what it can (and cannot) tell us about students, teachers, and learning. I focus my research on topics that can improve these conditions in educational research, policy, and practice.

What are you currently working on?

I have four projects in progress that I am very excited about. The first concerns the assumptions of item response theory models and how we can best test for violations of them. Making accurate methods for testing measurement model assumptions more widely available is critical for ensuring the appropriate use and interpretation of the parameter estimates these models produce. The second involves developing two statistical models that can incorporate nominal and ordinal response data simultaneously within the same item. Models such as these would allow practitioners and researchers to model non-responses on tests and surveys, such as "not applicable" responses on Likert scales, more easily and appropriately. The third is an applied measurement project in which I am co-developing an adaptive, diagnostic assessment of reading skills for students in grades 3 to 5. The fourth continues my research on subpopulation item parameter drift and its relationship to differential item functioning and equating invariance. These three phenomena are statistical manifestations of measurement bias, and they pose problems for achieving standards of fairness in large-scale educational testing.