Fulbright Awareness Month: March 10 to April 10, 2017

The International Center is pleased to announce its celebration of Fulbright Awareness Month, March 10 to April 10, 2017. In collaboration with the UF Fulbright Lectures Committee, UF Honors, the Graduate School, and the North Florida Fulbright Alumni Association, multiple activities for students, scholars, faculty, and staff will provide opportunities to learn about the Fulbright application process and to hear about the experiences of past Fulbright scholars and students.

Also this year, information sessions for faculty interested in applying for the Fulbright U.S. Scholar Program will be led by Dr. Andy Riess, Assistant Director of Outreach at the Council for International Exchange of Scholars (CIES) in Washington, D.C. Dr. Riess will also be available for consultations with interested faculty applicants.

Below is the calendar of UF events programmed for Fulbright Awareness Month:

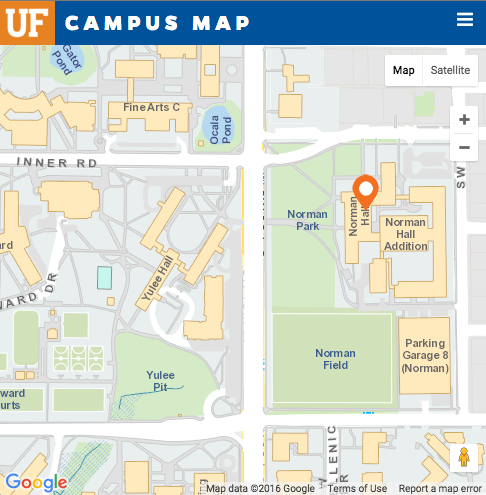

- Fulbright U.S. Scholar Program Info Sessions, March 13th, 9:00 am and 2:00 pm, UF International Center (The Hub); individual consultations available on the same day

- U.S. Student Program Info Sessions, March 15th, 4:05 pm, and March 30th, 3:00 pm, UF International Center

- “Things to Consider When Applying for a Fulbright and Preparing to Go,” Workshop by Karen Reed (for faculty), March 15th, 10:00 am, UF International Center

- President’s Fulbright Reception (by invitation only), March 15th, 5:30 pm, University House

- “How to Prepare a Successful Fulbright Application,” by Anna Calluori and John Freeman (for faculty), April 7th, 3:30 pm, UF International Center

Fulbright grants and fellowships are available in all fields of study and in many world regions. For more information, see http://ufic.ufl.edu/Fulbright/index.html

To learn more about Fulbright programs and activities at UF, contact the appropriate Fulbright coordinator listed below:

Regan Garner, rlgarner@ufl.edu, U.S. Student Program

Debra Anderson, danderson@ufic.ufl.edu, Foreign Student Program

Claire Anumba, canumba@ufic.ufl.edu, U.S. Scholar Program (UF Faculty)

Scott Davis, sdavis@ufic.ufl.edu, Visiting Scholar Program

Matt Mitterko, mmitterko@aa.ufl.edu, Non-resident Tuition Waiver

Charlie Guy, clguy@ufl.edu, UF Fulbright Lecture Series Committee

Carlos Maeztu, maeztu@gmail.com, North Florida Chapter Fulbright Association

For more information, please contact Mabel Cardec at mcardec@ufic.ufl.edu