Research Spotlight: Joni Williams Splett

Q & A with Joni Williams Splett, Ph.D., Assistant Professor in the School of Special Education, School Psychology, and Early Childhood Studies

What basic questions does your research seek to answer?

How do we improve the mental health and wellness of children and youth? Through my research, I seek to identify strategies that help all children, youth, and their families achieve and maintain positive mental health outcomes. At the systems level, my research focuses on meaningfully interconnecting child-serving systems, such as schools and community mental health agencies, so that their combined resources are multiplicatively enhanced and the delivery of a continuum of evidence-based mental health practices is improved. At the student level, my research focuses on preventing and reducing aggressive behaviors through the development and testing of intervention programs for children, families, and schools.

What makes your work interesting?

Children’s mental health is gaining more national and international attention, and it is an area most people can agree is important. My research includes systems- and student-level questions, with an emphasis on the inclusion and integration of families, communities, and schools. In this way, I seek to use resources more effectively to improve access to mental health promotion, prevention, and intervention, and to improve the associated outcomes. My research questions, thus, address intervention effectiveness as well as resource allocation, access, and economic impact.

What are you currently working on?

My current systems-level work includes three grant-funded, national projects, while my student-level intervention research program is focused on revising and testing GIRLSS (Growing Interpersonal Relationships through Learning and Systemic Support), a group counseling intervention to reduce relational aggression.

Currently, my largest project is a four-year, multisite randomized controlled trial of the Interconnected Systems Framework (ISF) funded by the National Institute of Justice (NIJ). I am co-principal investigator of the study and principal investigator of the Florida site. The ISF is a structure and process for blending education and mental health systems through a multitiered system of mental health promotion, prevention, and intervention. It interconnects the multitiered system features of School-Wide Positive Behavioral Interventions and Supports (PBIS) with the evidence-based mental health practices of community mental health agencies in the school setting. Specifically, key components of the ISF include interdisciplinary collaboration and teaming, data-based decision making, and evidence-based mental health promotion, prevention, and intervention practices. We are in the first of two implementation years of the NIJ-funded randomized controlled trial, which will be followed by one year of follow-up and sustainability tracking.

My second systems-level project is the development and validation of an action planning fidelity measurement tool for the ISF, called the ISF Implementation Inventory (ISF-II; Splett, Perales, Quell, Eber, Barrett, Putnam, & Weist, 2016). During phase one of this project, we piloted the ISF-II with three school districts in three states to examine the tool’s content and social validity. We revised the measure accordingly and are now testing its reliability, construct validity, and social validity in phase two. I am leading the phase two study in collaboration with the National PBIS Technical Assistance Center’s ISF workgroup, and it currently includes more than 10 school districts in seven states. We aim to include more than 100 schools in our phase two psychometric study of the ISF-II.

The third systems-level project that I am advancing examines the adoption considerations and implementation outcomes of universal mental health screening in schools. Mental health screening is a key data-based decision-making component of the ISF, as it is hypothesized to improve both the identification of children and youth who need mental health services and their access to those services. Currently, I have several papers under review or in preparation in this area. My major project examines intervention receipt outcomes in schools that use a mental health screener. Schools have limited intervention resources, and it is unlikely that every student a universal mental health screener identifies as in need will receive services. My research team is using real-life screening data from schools implementing the ISF, combined with service receipt, teacher survey, and extant student records data, to compare the characteristics of students identified by the screener as in need who receive intervention with those of identified students who do not. Our findings will inform recommendations to schools and policy makers for improving the implementation of these screening tools.

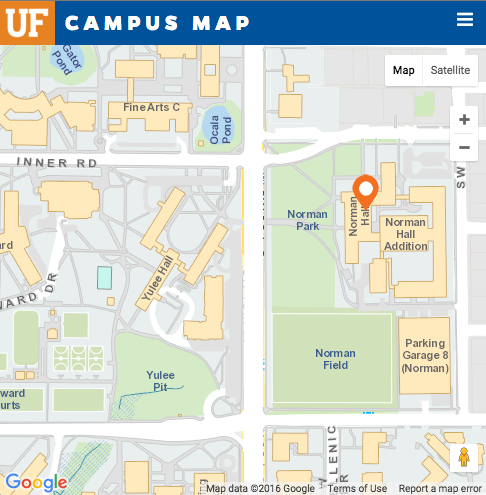

At the student level, I am excited to be revising and testing the referral and intervention protocol of GIRLSS. I developed GIRLSS during a practicum placement in graduate school and tested it for my dissertation, but I was unable to advance the work during my internship or postdoctoral positions. At UF, I have developed a partnership with Stephen Smith, Ph.D., in the Special Education program, who has successfully developed and tested other interventions to prevent and reduce aggressive behaviors in the school setting. Together, we lead a team of doctoral students who have revised the GIRLSS group counseling curriculum and conducted a field trial of it with middle school girls attending a local summer camp. Currently, we are writing grant applications to fund further development and testing of our revised referral and intervention protocol.